The pace of speech in a video directly affects how well the audience absorbs and understands information. Speech that is too fast is hard to follow, and speech that is too slow loses the listener's attention. For creators who produce videos with AI, natural pacing is just as important for keeping viewers engaged. Tools that give precise control over speech speed and pauses make messages clearer. With the rise of lip sync AI, these controls have become central to producing professional, lifelike videos that retain viewer attention without the frustration of mismatched audio or awkward timing.

Understanding Speech Speed in AI Lip Sync

Setting the speech rate in an AI video means matching the audio to the appropriate lip movements. When the audio is excessively fast, avatars may be unable to reproduce natural mouth positions and can look unnatural. Conversely, speech that is too slow can make the animation look sluggish and awkward. Natural pacing also follows the rhythm of human conversation, in which small accelerations and decelerations add emphasis and emotion. Each language has its own timing as well. Languages such as Japanese or Spanish vary naturally in syllable length, and AI lip-sync models must reflect that variation for the speech to remain intelligible. Adjusting the pace should never undermine the realism of the speech, but the avatar should never sound dull either.

Pause Management for Conversational Realism

Silences are an important part of realism in dialogue-based content. Strategic pauses emphasize key points and give viewers time to absorb them. Removing unnecessary silence eliminates awkward gaps and keeps the narrative moving. Pauses should be accompanied by facial cues, such as blinking or small head movements, to look more realistic. Overusing pauses can make a character seem mechanical and less appealing to the audience. Good pause management improves comprehension and ensures that the video conveys the intended tone, whether educational, persuasive, or entertaining. Combined with control of speech speed, pauses improve the quality of AI-generated content, but only when they are timed carefully.
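To see how speech speed and pauses add up to total runtime, the sketch below estimates the duration of a narrated script. It is a minimal illustration and not part of Pippit: the function name `estimate_duration`, the baseline of 150 words per minute, and the assumption that pauses are not shortened when speech is sped up are all hypothetical choices made for this example.

```python
def estimate_duration(script, speed=1.0, pauses=(), base_wpm=150.0):
    """Estimate narration runtime in seconds.

    script   -- the text to be spoken
    speed    -- playback multiplier (1.0 = baseline, 1.25 = 25% faster)
    pauses   -- pause lengths in seconds, inserted between phrases
    base_wpm -- assumed baseline speaking rate in words per minute
    """
    if speed <= 0:
        raise ValueError("speed must be positive")
    if base_wpm <= 0:
        raise ValueError("base_wpm must be positive")

    word_count = len(script.split())
    # Time spent actually speaking, scaled by the speed multiplier.
    speaking_seconds = word_count / (base_wpm * speed) * 60.0
    # Assumption: pauses keep their length regardless of speech speed.
    return speaking_seconds + sum(pauses)


if __name__ == "__main__":
    script = "word " * 150  # stand-in for a 150-word script
    print(estimate_duration(script, speed=1.25, pauses=(0.5, 1.0)))
```

Under these assumptions, a 150-word script at 1.25x speed with two pauses of 0.5 and 1.0 seconds runs for about 49.5 seconds. A quick estimate like this helps when deciding whether a script fits a target video length before adjusting the pacing.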

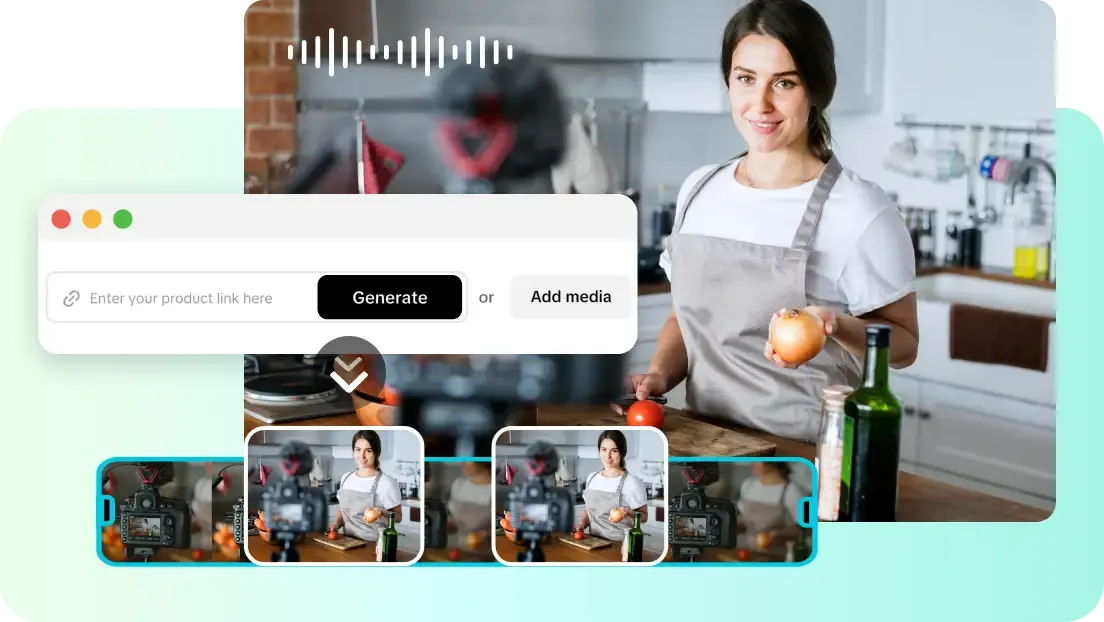

Pippit’s Speech Speed Adjustment Tools

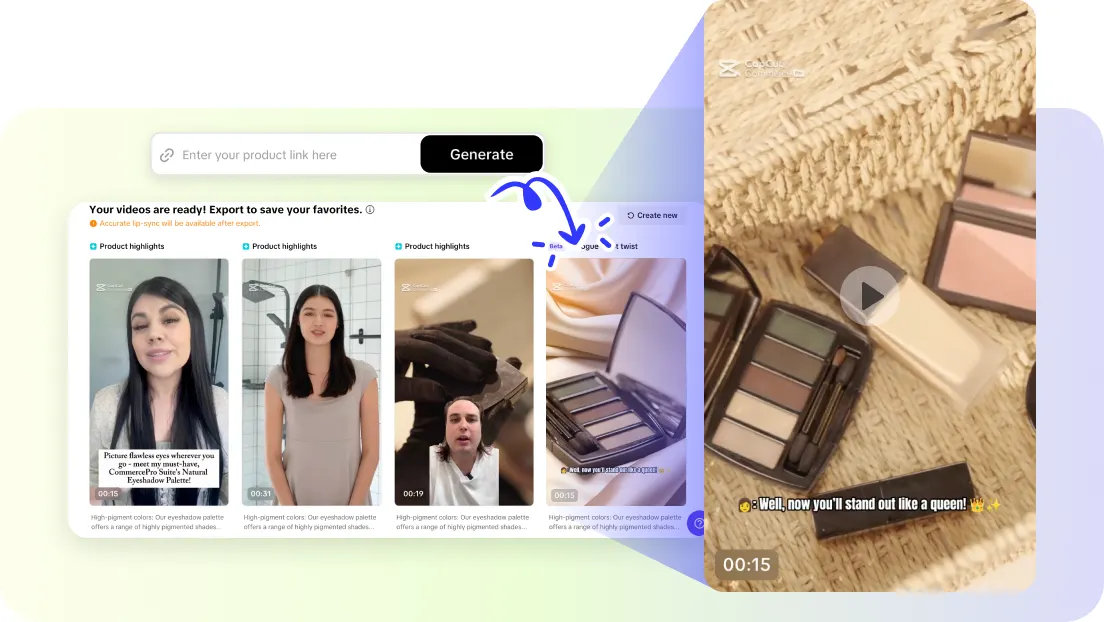

Pippit makes it intuitive to fine-tune the pacing of speech in AI videos. A slider controls the speed precisely, without the complexity of audio editing. Live lip-sync correction ensures that any tempo change is immediately reflected in the mouth movements, which removes unnatural distortions. You can see changes immediately and keep adjusting until you reach the right balance between naturalness and clarity. The tools are especially useful for turning scripts into something worth watching, whether it is a short social media video or a long educational presentation. Pippit simplifies speech adjustments so that creators can achieve professional results without hassle.

Steps to Adjust Speech Speed and Pauses Easily in Lip Sync AI Videos

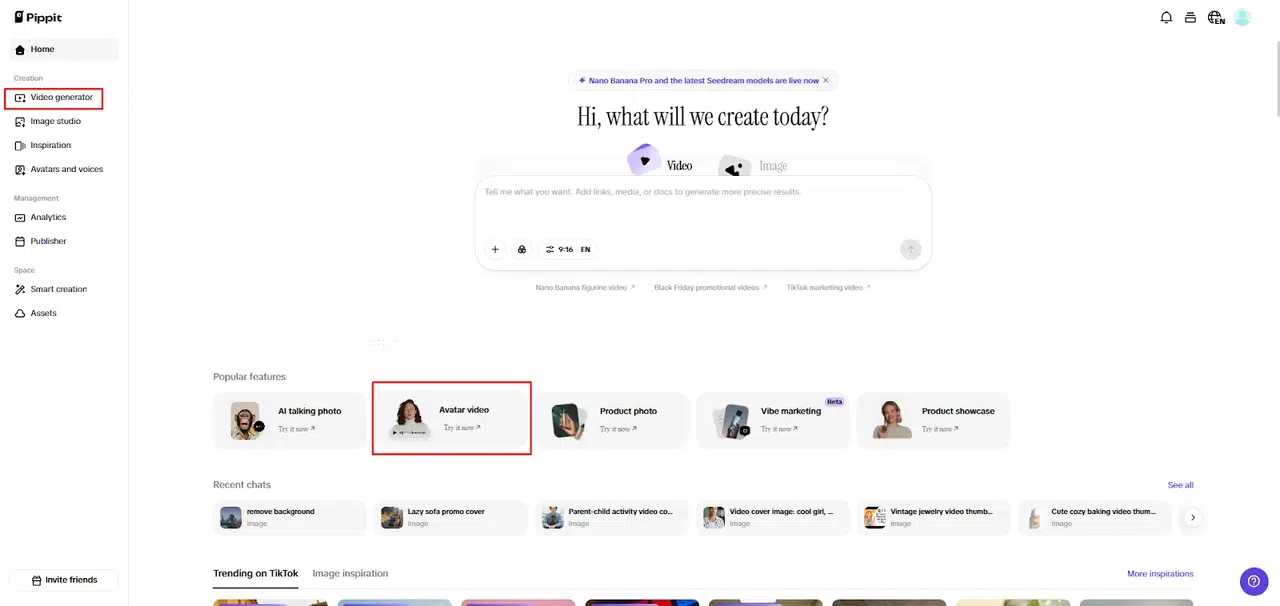

Step 1: Start with speech-ready avatar tools

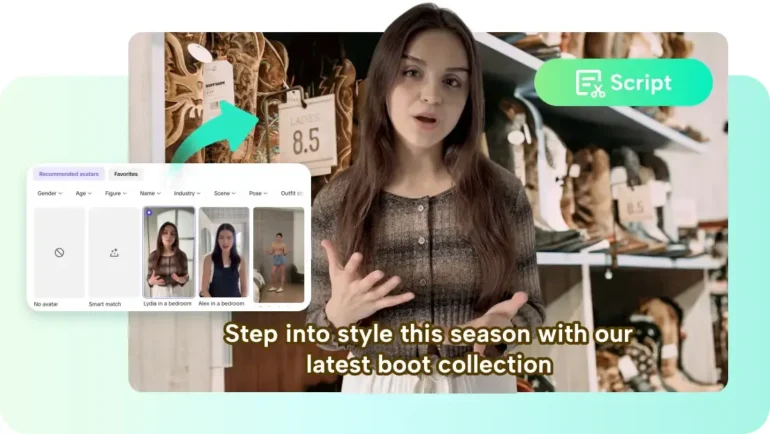

Access Pippit and click on “Video generator” from the left-hand menu. In Popular tools, select “Avatar video” to work with avatars that allow flexible speech speed and natural pauses without losing lip-sync accuracy.

Step 2: Modify script flow and delivery

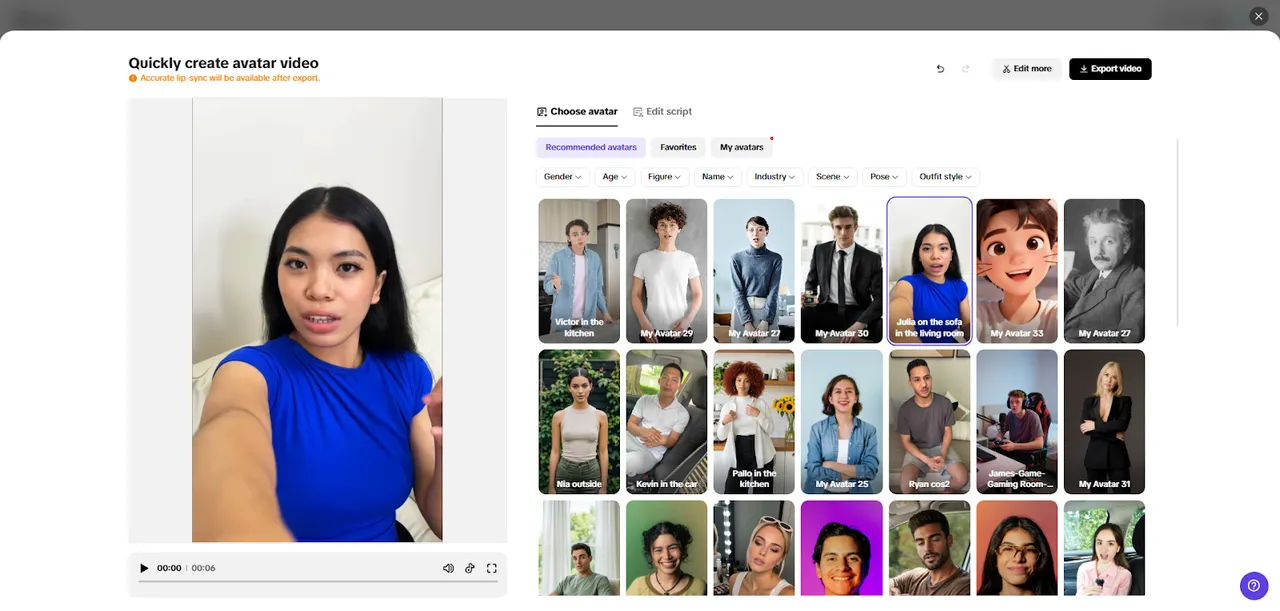

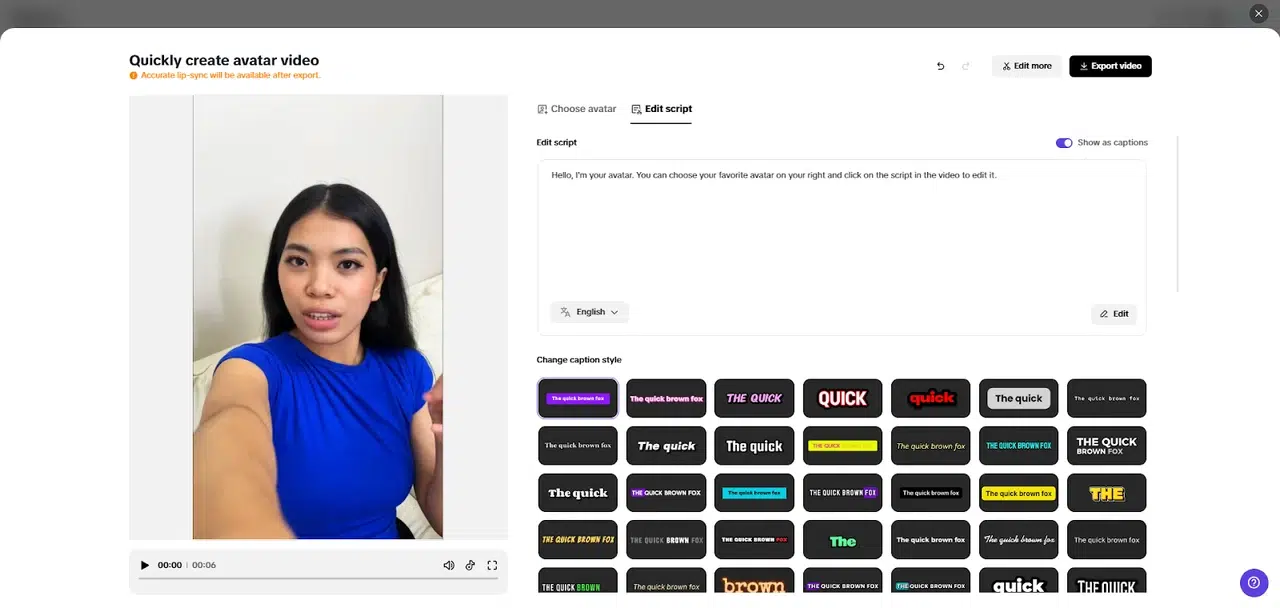

Choose an avatar from the “Recommended avatars” section using relevant filters.

Click “Edit script” to control speech pacing and insert pauses where needed. The avatar adapts smoothly, even with multilingual text. Scroll to “Change caption style” to ensure captions reflect the adjusted rhythm.

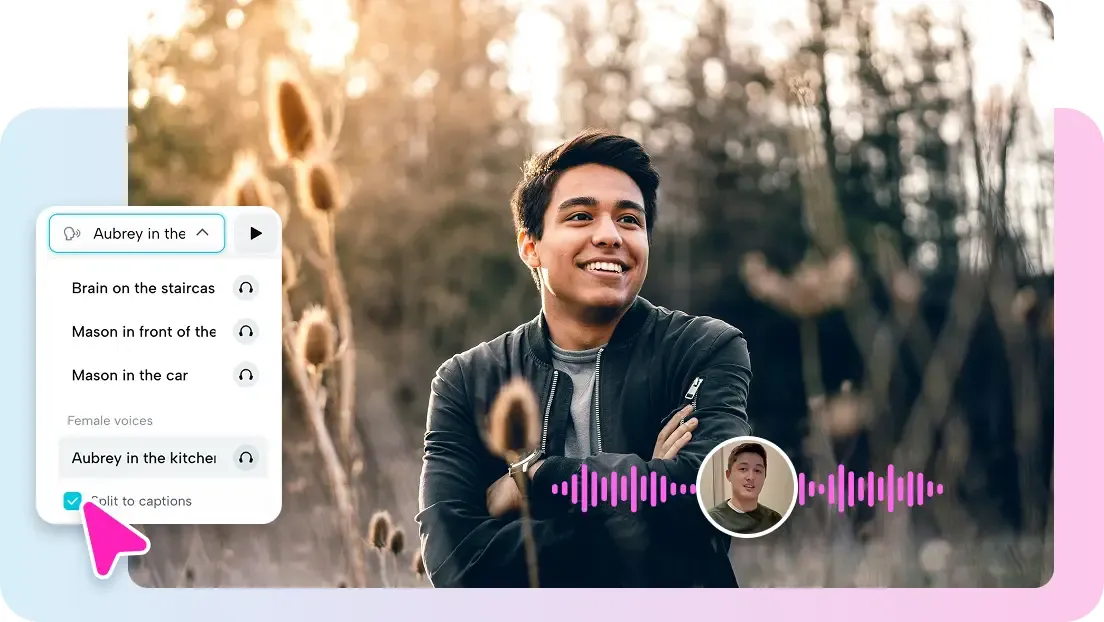

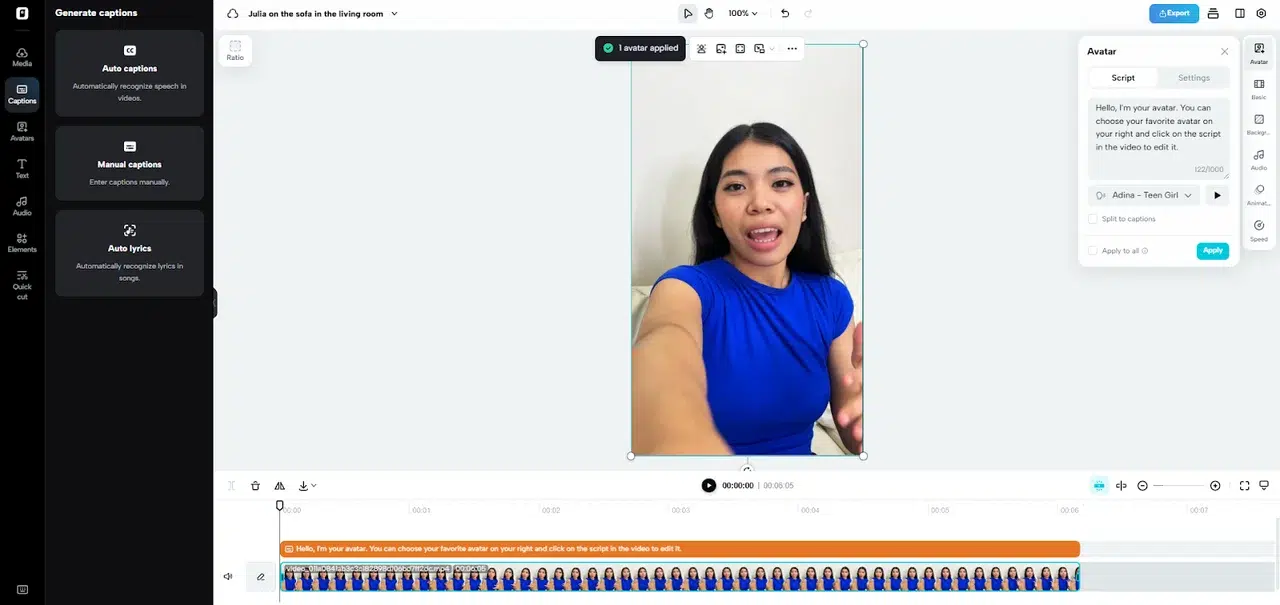

Step 3: Refine speech and distribute content

Click “Edit more” to fine-tune timing, polish expressions, and enhance realism. Add text overlays or music to support the speech flow.

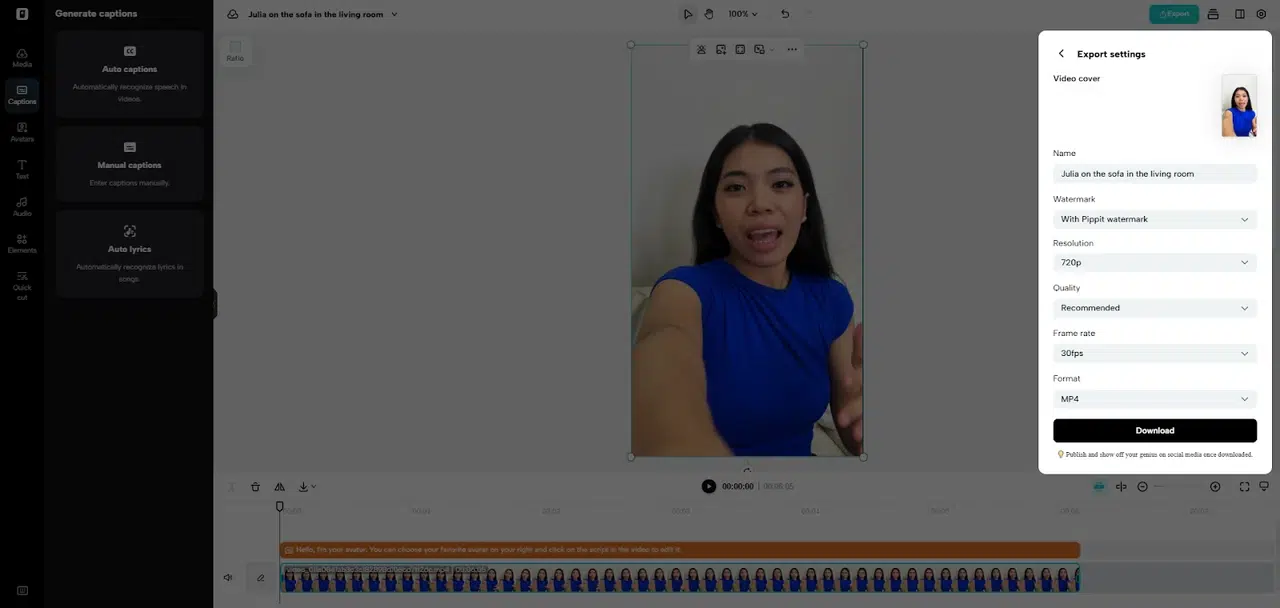

When satisfied, click “Export” to download the video. Use the Publisher feature to post on TikTok, Instagram, or Facebook, and review engagement data in Analytics to optimize future videos.

Exporting the video with optimized pacing helps produce a polished, professional output suitable for any platform. These steps make photo-to-video AI workflows seamless and efficient.

Balancing Speed and Emotional Expression

Speech speed and emotion must be balanced to keep the viewer engaged. Fast speech can convey excitement or urgency, but it may sacrifice clarity. Slower pacing works better for instructional or teaching content, where listeners need time to follow complex ideas. Emotional context should determine when the pace changes. For example, surprising or awkward moments benefit from longer pauses, while confident statements can be delivered more briskly. Combining emotional cues with controlled speech speed makes avatars feel familiar and more human, rather than giving the impression of slow-paced, machine-like AI videos.

Multilingual Speech Speed Considerations

Creating content for a global audience means accounting for differences in rhythm between languages. Some languages have long syllables, while others rely on rapid-fire pronunciation. Accurate pronunciation and appropriate variation in speed are both important for intelligibility. Creators should also be aware of cultural expectations about conversational pace when working with multilingual speech. A considerate tempo and well-placed pauses help AI-generated videos reach international viewers. Pippit's tools can preserve these settings across many languages without losing lip-sync fidelity, which enhances global reach and interactivity.

Avoiding Over-Processing Speech Timing

Over-manipulating speech can lead to unnatural or robotic voices. Authenticity is maintained through minor variations in timing and pauses. Overused adjustments break that effect and make avatars look unreal. Consistency between scenes matters as well. Abrupt changes in rhythm can confuse the audience and make the video less immersive. Small, targeted adjustments keep the avatar's delivery natural and the video's visual and audio impression consistent. Moderate changes, rather than extreme ones, strike the best balance between technical accuracy and realism.
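One way to picture consistency between scenes is to cap how much the speech speed may change from one scene to the next. The sketch below is a hypothetical illustration, not a Pippit feature: the function name `smooth_scene_speeds` and the `max_step` limit are assumptions introduced for this example.

```python
def smooth_scene_speeds(speeds, max_step=0.1):
    """Limit the speed change between consecutive scenes.

    speeds   -- desired speed multiplier for each scene, in order
    max_step -- largest allowed change from one scene to the next
    """
    if max_step < 0:
        raise ValueError("max_step must not be negative")

    smoothed = []
    for target in speeds:
        if not smoothed:
            # The first scene keeps its requested speed.
            smoothed.append(target)
            continue
        previous = smoothed[-1]
        lower, upper = previous - max_step, previous + max_step
        # Clamp the requested speed into the allowed window
        # around the previous (already smoothed) scene.
        smoothed.append(min(max(target, lower), upper))
    return smoothed


if __name__ == "__main__":
    print(smooth_scene_speeds([1.0, 1.5, 0.8], max_step=0.25))
```

With a `max_step` of 0.25, a requested jump from 1.0 to 1.5 is reduced to 1.25, and the following drop to 0.8 is softened to 1.0, so the rhythm shifts gradually instead of abruptly.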

Conclusion

Control over speech rate and pauses makes AI videos less mechanical and more engaging. Adjusting the speed, placing strategic pauses, and coordinating those pauses with facial expressions improves comprehension and holds the viewer's interest. The Pippit AI video generator provides an easy-to-use platform for these refinements, including real-time adjustments, user-friendly sliders, and instant previews.

With these tools, creators can produce polished, professional, and emotionally expressive AI lip-sync videos at scale. Good speech control also lets avatars speak naturally and makes content more engaging for audiences across the globe. Whether for marketing, education, or entertainment, good pacing improves the quality and effectiveness of AI-generated videos.